Security 404 - Disinformation Injection Attacks

Disinformation isn’t just a social engineering attack; it’s a buffer overflow attack of the mind.

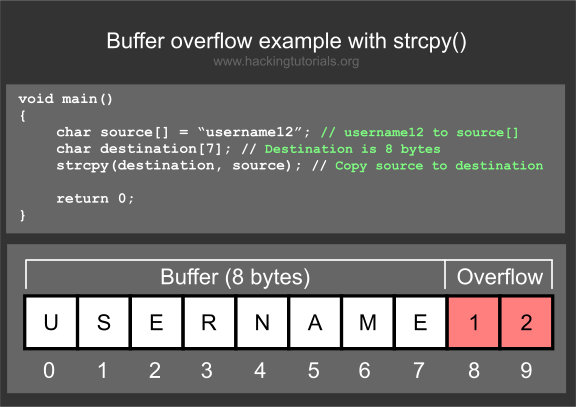

Most people may not be familiar with an information security vulnerability called a buffer overflow. Here’s a small example:

Buffer overflow attacks work when a program needs to accept input from the user (think of a program that asks for your username, like the example above). The issue is that the programmer uses a function like strcpy(), which copies the input without checking the size of the destination. The problem here is twofold:

1. If you throw enough data into this input area, the program can crash, resulting in a Denial of Service condition.

2. If you craft a specialized request, taking into account memory allocations, you can trick the program into running almost any code you want it to run.
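For readers who want to see the pattern in text form, here is a minimal sketch in C. It is not a reproduction of the screenshot above; the function names and the 16-byte `NAME_SIZE` are hypothetical, chosen only for illustration. The first function shows the unchecked strcpy() copy, and the second shows one common way to bound the copy with snprintf().

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define NAME_SIZE 16 /* hypothetical buffer size, chosen for illustration */

/* UNSAFE: strcpy() copies until it reaches the terminating '\0' in input
 * and never checks how much room dest has. Any input of NAME_SIZE bytes
 * or more writes past the end of the buffer. Shown for contrast only. */
void read_username_unsafe(char dest[NAME_SIZE], const char *input)
{
    strcpy(dest, input);
}

/* SAFER: snprintf() writes at most NAME_SIZE bytes, including the
 * terminating '\0', and returns the length the full string would have
 * had. Returns 0 if the input fit, -1 if it had to be truncated. */
int read_username_bounded(char dest[NAME_SIZE], const char *input)
{
    assert(dest != NULL && input != NULL);
    int needed = snprintf(dest, NAME_SIZE, "%s", input);
    return (needed >= 0 && needed < NAME_SIZE) ? 0 : -1;
}
```

Calling `read_username_bounded()` with a short name returns 0. Calling it with 32 `A` characters returns -1 and keeps only the first 15, because the copy stops at the edge of the buffer. Handing the same 32 characters to `read_username_unsafe()` would write past the buffer, which is exactly the opening an attacker looks for.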

The definition of disinformation is as follows:

false information which is intended to mislead, especially propaganda issued by a government organization to a rival power or the media.

Oddly enough (or maybe not so oddly), the word appears to be a Cold War era term originating in the 1950s from the Russian dezinformatsiya.

Putting it together

No matter what side of the political fence you may be on, buffer overflow attacks exist. They have nothing to do with politics and everything to do with programming techniques that an attacker can take advantage of, effectively altering the behavior of a program.

Similarly, I would posit that the information in our heads follows rules much like those of the vulnerable functions programmers sometimes use, the ones that end up succumbing to these buffer overflow attacks.

Whether you label the approach disinformation, mind control, far (left/right) theory, spin, or just opinion, in this ever-connected world there is a war going on for your head space.

There are ads tailored to your preferences, based on behaviors and information linked to your browsing actions and history. Television and radio are similarly trying to sway you, whether through varying degrees of “expert” opinion or by showing group buy-in or support en masse for certain products, services, people, or ideas.

This is unnervingly similar to a buffer overflow attack. Hot-button issues especially are the payload: if we allow it, portions of the campaign end up overwriting what we accept as fact, leaving our beliefs in the control of others with differing agendas. But how does this even happen?

In phishing awareness training, I’ve taught employees to guard their emotions because attackers prey on them. Feigning an emergency to provoke a reaction (sometimes any reaction) works in the attacker’s favor.

Disinformation Overflow (as I’ll call it) usually stems from this same emotional reaction: a sense of urgency, where we’re being shown something so absolutely outrageous that it could not possibly be true, and yet an individual is pleading for action. And who are we if not compassionate and reactive people?

This is why Cambridge Analytica (and their parent company “SCL Elections”) was so successful in their social media strategies. They were able to target, isolate, segregate, or coalesce people based on certain affiliations, make them rally around these affiliations (all online), and then manifest these affiliations into an actual presence culminating in voter turnout. They crafted a specific campaign based on known beliefs, and only slightly modified those beliefs to suit their contracted goals. This was worse than Disinformation Overflow; this was Disinformation Overflow As-A-Service!

Trust is a slippery slope. Once it is gained, it should not be taken for granted. You can be certain that the shady actors who have it know the gold they possess. It pains me, then, to read news articles about police departments creating their own disinformation campaigns, luring criminals to bring drugs into their local precinct because of reports of the drugs being tainted with COVID-19. It’s true that police often use deception, and to be fair this was done prior to the virus spreading into its current pandemic state. But broadly obvious disinformation campaigns posted on social media, like this one, do nothing but sow additional public distrust in the very institution that always seems to be fighting to win that trust back.

Technofakery

To add to the confusion breakfast, the artificial-intelligence and machine-learning pancake enters the mix. Tools using these technologies enable the creation of deepfakes like the one of former president Barack Obama. Now, before you say that this is nowhere near realistic, remember a couple of things:

- If it appeared on your small, beat-up iPhone, would you be able to tell the difference?

- This was done in 2018. Keeping in mind how quickly tech capability grows from year to year, a similar video today would likely be better.

Is it tin-foil hat time?

Ha, no. This post is not about protecting your brain against the harmful mind control waves emanating from your Facebook account. Sometimes guarding against something means we simply see it and recognize it for what it is, or at least what it could be. Not real. If you believe in something or someone, that belief should not be easily shaken, regardless of the news cycle. How do you do this?

1. I don’t let any single news outlet determine my acceptance of any specific reported event. Seeing it on multiple news outlets (especially ones that cater to different party lines) gives more credence to the report.

2. Some stories are just that. Snopes.com is great for ferreting out the truth on some of these unbelievable events (past and present).

3. Separate yourself from your biases. Ask yourself tough questions here, as these biases can make you a target for some of these campaigns.

4. Separate your beliefs from your biases. To put it bluntly, everyone has a button that can be pushed, and it makes us a target for these types of attacks, where some of us will accept any propaganda linked to these beliefs.

5. Respect. Respect yourself. Respect others. Respect others’ right to be completely and utterly incorrect without having to tell them how incorrect they are.

Before retweeting, responding, or otherwise publicly reacting to something you’ve read or watched, review 1-5 above.